Summer School Session 4: Tracking Methods - Meanshift, Camshift and Kalman Filter

In this post we will go over the content covered during the fourth Summer School session.

The Jupyter notebook for this session can be found here.

What is object tracking?

Simply put, it is the process of locating a moving object (or multiple objects) over time using a camera.

Why study object tracking?

Object tracking is useful in a variety of applications, such as human-computer interaction, security and surveillance, medical imaging and augmented reality.

Histogram backprojection :

Histogram backprojection, in simple words, creates a single-channel image of the same size as our input image, in which each pixel value corresponds to the probability of that pixel belonging to our object. In other words, the object of interest appears whiter in the output image than the rest of the scene. It is used to find objects of interest in an image and in other image segmentation applications.

How do we get it?

First, we create a histogram of an image containing our object of interest. A colour histogram is preferred over a grayscale one as it contains more information about the object. We then “back-project” this histogram over the test image in which we need to find the object, i.e. we calculate the probability of every pixel belonging to the target object and show it. Thresholding the resulting output at a suitable value gives us the object of interest. To understand what we mean by “back-project” consider a case when our image is

Implementation :

First we need to calculate the colour histogram of both the object we need to find (let it be \(O\)) and the image in which we are going to search for the object (let it be \(I\)).

import cv2

import numpy as np

from matplotlib import pyplot as plt

#roi is the region of object we need to find

roi = cv2.imread('patch.jpg')

hsv = cv2.cvtColor(roi,cv2.COLOR_BGR2HSV)

#target is the image we search in

target = cv2.imread('messi5.jpg')

hsvt = cv2.cvtColor(target,cv2.COLOR_BGR2HSV)

# Find the histograms using calcHist. Can be done with np.histogram2d also

roihist = cv2.calcHist([hsv],[0, 1], None, [180, 256], [0, 180, 0, 256] )

cv2.normalize(roihist,roihist,0,255,cv2.NORM_MINMAX)

We then find the ratio histogram \(R\) defined for each bin \(j\) as

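\[R_{j} = \min\left( \frac{O_{j}}{I_{j}},~1 \right)\]

The back-projection itself is done by the built-in function cv2.calcBackProject(), which here looks up every pixel of the target image in the normalized object histogram roihist: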

R=cv2.calcBackProject([hsvt],[0,1],roihist,[0,180,0,256],1)
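To make the ratio histogram and the lookup step concrete, here is a minimal NumPy sketch on a tiny synthetic hue image. The helper name backproject_ratio, the 4-bin quantisation and the arrays object_hue and image_hue are illustrative assumptions, not part of the session notebook.

```python
import numpy as np

def backproject_ratio(object_hue, image_hue, bins=4, max_value=180):
    """Back-project the ratio histogram R = min(O / I, 1) onto image_hue."""
    edges = np.linspace(0, max_value, bins + 1)
    O, _ = np.histogram(object_hue, bins=edges)   # histogram of the object
    I, _ = np.histogram(image_hue, bins=edges)    # histogram of the search image
    # ratio histogram; bins that are empty in the image get a ratio of 0
    R = np.minimum(np.divide(O, I, out=np.zeros(bins), where=I > 0), 1.0)
    # for every pixel, look up the ratio of the bin its hue falls into
    bin_index = np.clip(np.digitize(image_hue, edges) - 1, 0, bins - 1)
    return R[bin_index]

# hypothetical data: the object occupies the top-right 2x2 corner of the image
object_hue = np.array([[100, 110], [105, 120]])
image_hue = np.array([[10, 20, 100, 110],
                      [15, 25, 105, 120],
                      [12, 18, 30, 40],
                      [14, 16, 35, 170]])
B = backproject_ratio(object_hue, image_hue)
print(B)
```

In this toy case the backprojected image B is bright only where the image hues fall into the bins that the object occupies.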

This backprojected image is then convolved with a mask, which for an object of compact shape and unknown orientation can be a circle of same area as that of the object.

# convolution with a circular disc, B = D * B, where D is the disc kernel

disc = cv2.getStructuringElement(cv2.MORPH_ELLIPSE,(5,5))

cv2.filter2D(R,-1,disc,R)

R = np.uint8(R)

cv2.normalize(R,R,0,255,cv2.NORM_MINMAX)

The location of maximum intensity now gives us the location of the object. If we are expecting a region in the image, thresholding at a suitable value gives a nice result.

ret,R = cv2.threshold(R,50,255,0)

res=cv2.merge((R,R,R))

res=cv2.bitwise_and(res,target)

cv2.imshow('result',res)

cv2.waitKey(0) & 0xFF

cv2.destroyAllWindows()

Meanshift

Simply put, meanshift is an analysis technique for locating the maxima of a density function, a so-called mode-seeking algorithm. In the context of visual tracking, the meanshift algorithm uses the colour histogram of the object in the previous image to find the peak of a probability density function near the object’s old position. We will give a brief overview of the underlying mathematics behind the procedure, and then develop a basic intuition of how it is useful for us in visual tracking.

Underlying mathematics

Meanshift is a procedure to locate the maxima of a density function. First, we start with an initial estimate \(\displaystyle x\). Let a kernel function \(\displaystyle K(x_{i}-x)\) be given. This function determines the weight of nearby points \(x_i\) for re-estimation of the mean. (In the case of visual tracking, the “nearby” points are all points falling within the target window.) Typically a Gaussian kernel on the distance to the current estimate is used, i.e. \(\displaystyle K(x_{i}-x)=e^{-c \left\| x_{i}-x \right\| ^{2}}\). The weighted mean of the density in the window determined by \(\displaystyle K\) is

\[m(x)= \frac {\sum _{x_{i}\in N(x)} K(x_i-x)\,x_i} {\sum _{x_{i}\in N(x)}K(x_i-x)}\]

where \(N(x)\) is the neighbourhood of \(x\), in our case the set of points within the target window.

The difference \(m(x) - x\) is called the mean shift. The algorithm now sets \(x \leftarrow m(x)\) and repeats until \(m(x)\) converges.
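The update rule can be sketched in a few lines of NumPy. This is only an illustration on synthetic 2-D points: the function name mean_shift_point, the window radius and the kernel constant c are assumptions chosen for the example.

```python
import numpy as np

def mean_shift_point(points, x, c=0.5, radius=3.0, tol=1e-6, max_iter=100):
    """Iterate x <- m(x) with a Gaussian kernel over the neighbourhood N(x)."""
    x = np.asarray(x, dtype=float)
    for _ in range(max_iter):
        dist2 = np.sum((points - x) ** 2, axis=1)
        inside = dist2 <= radius ** 2            # N(x): points within the window
        if not inside.any():
            break
        weights = np.exp(-c * dist2[inside])     # K(x_i - x)
        m = weights @ points[inside] / weights.sum()
        shift = np.linalg.norm(m - x)            # length of the mean shift m(x) - x
        x = m
        if shift < tol:
            break
    return x

# synthetic cluster of points centred on (5, 5)
rng = np.random.default_rng(0)
points = rng.normal(loc=(5.0, 5.0), scale=0.5, size=(200, 2))
mode = mean_shift_point(points, x=(4.0, 4.0))
print(mode)
```

Starting from (4, 4), the estimate climbs towards the densest part of the cluster and stops once the shift becomes negligible.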

Intuition

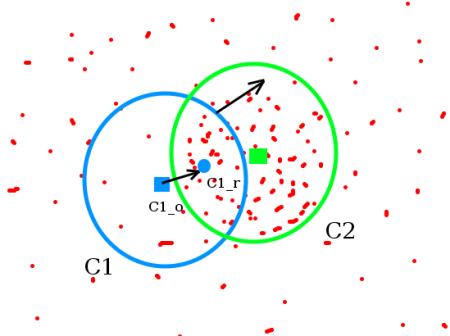

Consider that you have a set of points (it can be a pixel distribution such as a histogram backprojection). You are given a small window (maybe a circle) and you have to move that window to the area of maximum pixel density (or maximum number of points). This is illustrated in the accompanying image.

The initial window is shown as a blue circle named C1. Its original centre is marked with a blue rectangle, named C1_o. But if you find the centroid of the points inside that window, you will get the point “C1_r” (marked with a small blue circle), which is the real centroid of the window. Surely they don’t match. So move your window such that the centre of the new window matches the previous centroid. Again find the new centroid. Most probably, it won’t match. So move it again, and continue the iterations until the centre of the window and its centroid fall on the same location (or within a small desired error). What you finally obtain is a window with maximum pixel distribution. It is marked with a green circle, named C2. As you can see in the image, it has the maximum number of points.

Essentially, the working of the function can be summarized as follows:

1. We pass the initial location of our target object and the histogram backprojected image to the meanshift function.

2. As the object moves, the histogram backprojected image also changes.

3. The meanshift function moves the window to the new location with maximum probability density.
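The steps above can be sketched without OpenCV on a synthetic backprojection image. This is a simplified illustration, not the implementation behind cv2.meanShift(): the function name mean_shift_window and the toy image are assumptions.

```python
import numpy as np

def mean_shift_window(prob, window, max_iter=10, eps=1.0):
    """Move an (x, y, w, h) window to the centroid of prob until it stops moving."""
    x, y, w, h = window
    rows, cols = prob.shape
    for _ in range(max_iter):
        patch = prob[y:y + h, x:x + w]
        m00 = patch.sum()
        if m00 == 0:                          # nothing to follow inside the window
            break
        ys, xs = np.mgrid[0:patch.shape[0], 0:patch.shape[1]]
        cx = (xs * patch).sum() / m00         # centroid column inside the patch
        cy = (ys * patch).sum() / m00         # centroid row inside the patch
        # shift the window so that its centre lands on the centroid
        new_x = int(round(x + cx - (w - 1) / 2))
        new_y = int(round(y + cy - (h - 1) / 2))
        new_x = min(max(new_x, 0), cols - w)  # keep the window inside the image
        new_y = min(max(new_y, 0), rows - h)
        moved = abs(new_x - x) + abs(new_y - y)
        x, y = new_x, new_y
        if moved < eps:
            break
    return x, y, w, h

prob = np.zeros((100, 100))
prob[60:80, 50:70] = 1.0                      # the "object" in the backprojection
window = mean_shift_window(prob, (35, 45, 20, 20))
print(window)
```

The window starts with only a small overlap with the bright region and moves a little further onto it at every iteration until it covers the region completely.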

OpenCV Implementation

To use meanshift in OpenCV, we first need to set up the target and find its histogram so that we can backproject the target on each frame for the meanshift calculation. We also need to provide the initial location of the window. For the histogram, only hue is considered here. Also, to avoid false values due to low light, low-light values are discarded using the cv2.inRange() function.

Let us first download the video that we are going to use for the meanshift and camshift code.

Note: Run the following in a terminal to install youtube-dl, an all-round YouTube downloader.

sudo apt-get install youtube-dl

import numpy as np

import cv2

cap = cv2.VideoCapture('file slow.flv in open cv-c99kI_2wHuo.mp4')

# take first frame of the video

ret,frame = cap.read()

cv2.imshow('frame',frame)

cv2.waitKey(0) & 0xFF

# setup initial location of window

r,h,c,w = 127,50,193,75# simply hardcoded the values

track_window = (c,r,w,h)

# set up the ROI for tracking

roi = frame[r:r+h, c:c+w]

cv2.imshow('roi',roi)

hsv_roi = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV)

# mask that keeps only the bright pixels of the ROI

mask = cv2.inRange(roi, np.array((135.,135.,135.)), np.array((360.,255.,255.)))

#mask = cv2.inRange(hsv_roi, np.array((0., 60.,32.)), np.array((180.,255.,255.)))

# hue histogram of the ROI, matching the hue backprojection computed in the loop

roi_hist = cv2.calcHist([hsv_roi],[0],mask,[180],[0,180])

cv2.normalize(roi_hist,roi_hist,0,255,cv2.NORM_MINMAX)

# Setup the termination criteria, either 10 iteration or move by atleast 1 pt

term_crit = ( cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 1 )

while(1):

    ret ,frame = cap.read()

    if ret == True:

        hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

        dst = cv2.calcBackProject([hsv],[0],roi_hist,[0,180],1)

        #cv2.imshow('dst',dst)

        #cv2.waitKey(0)

        # apply meanshift to get the new location

        ret, track_window = cv2.meanShift(dst, track_window, term_crit)

        # Draw it on image

        x,y,w,h = track_window

        img2 = cv2.rectangle(frame, (x,y), (x+w,y+h), 255,2)

        cv2.imshow('frame',img2)

        # wait for a key press: Esc quits, any other key saves the frame under that key's name

        k = cv2.waitKey(0) & 0xff

        if k == 27:

            break

        else:

            cv2.imwrite(chr(k)+".jpg",img2)

    else:

        break

cv2.destroyAllWindows()

cap.release()

Limitation

The size of the target window does not change. Thus, when the target object moves closer to the camera, the algorithm is unable to track the object effectively. The target window needs to adapt to changes in the size and rotation of the object.

CAMshift

Continuously Adaptive Meanshift, or CAMshift, is an improvement over the meanshift algorithm for better visual tracking. In essence, it does almost exactly what meanshift does, but returns a resized and rotated target window.

What does it do?

It applies meanshift first. Once meanshift converges, it updates the size of the window as \(s=2\sqrt{\frac{M_{00}}{256}}\). (Here, \(M_{00}\) is the zeroth moment of the backprojected image inside the window, i.e. the sum of its pixel values, which measures how much object probability the window contains.) It also calculates the orientation of the best-fitting ellipse. (This gives the angle at which the target window must be inclined.) It then applies meanshift again with the newly scaled search window and the previous window location. The process is continued until the required accuracy is met.
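As a small worked example of the window-size update, the sketch below evaluates the formula for a backprojection patch with values in the range 0 to 255. The helper name camshift_window_size and the synthetic patch are assumptions made for illustration.

```python
import numpy as np

def camshift_window_size(prob_patch):
    """Return s = 2 * sqrt(M00 / 256) for a backprojection patch with values in 0..255."""
    m00 = float(prob_patch.sum())       # zeroth moment of the window
    return 2.0 * np.sqrt(m00 / 256.0)

# a 20x20 window that is completely filled by the object
patch = np.full((20, 20), 255, dtype=np.uint8)
s = camshift_window_size(patch)
print(round(s, 2))
```

For a window that is entirely covered by the object, the new size comes out at roughly twice the old side length, which gives the window room to grow when the object approaches the camera. If the object fills only part of the window, \(M_{00}\) is smaller and the window shrinks accordingly.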

Implementation :

import numpy as np

import cv2 as cv

cap = cv.VideoCapture('file slow.flv in open cv-c99kI_2wHuo.mp4')

# take first frame of the video

ret,frame = cap.read()

# setup initial location of window

r,h,c,w = 127,50,193,55 # simply hardcoded the values

track_window = (c,r,w,h)

# set up the ROI for tracking

roi = frame[r:r+h, c:c+w]

cv.imshow('roi',roi)

hsv_roi = cv.cvtColor(roi, cv.COLOR_BGR2HSV)

mask = cv.inRange(roi, np.array((155., 155.,155.)), np.array((360.,255.,255.)))

roi_hist = cv.calcHist([hsv_roi],[0],mask,[180],[0,180])

cv.normalize(roi_hist,roi_hist,0,255,cv.NORM_MINMAX)

# Setup the termination criteria, either 10 iteration or move by atleast 1 pt

term_crit = ( cv.TERM_CRITERIA_EPS | cv.TERM_CRITERIA_COUNT, 10, 1)

while(1):

    ret ,frame = cap.read()

    if ret == True:

        hsv = cv.cvtColor(frame, cv.COLOR_BGR2HSV)

        dst = cv.calcBackProject([hsv],[0],roi_hist,[0,180],1)

        # apply camshift to get the new location (rect is a rotated rectangle)

        rect, track_window = cv.CamShift(dst, track_window, term_crit)

        # Draw it on image

        cv.imshow('dst',dst)

        cv.waitKey(0)

        pts = cv.boxPoints(rect)

        # integer corner points (np.int0 is no longer available in recent NumPy releases)

        pts = np.int32(pts)

        img2 = cv.polylines(frame,[pts],True, 255,2)

        cv.imshow('img2',img2)

        k = cv.waitKey(0) & 0xff

        if k == 27:

            break

        else:

            cv.imwrite(chr(k)+".jpg",img2)

    else:

        break

cv.destroyAllWindows()

cap.release()

Kalman Filters

Kalman filtering is an algorithm that uses a series of measurements observed over time, containing statistical noise and other inaccuracies, and produces estimates of unknown variables that tend to be more accurate than those based on a single measurement alone.

In general, Kalman filters find applications in guidance and navigation, computer vision systems and signal processing. Kalman filters can prove to be useful in two interesting cases:

- The variables of interest can only be measured indirectly.

- Measurements available from various sensors are prone to noise.

One Dimensional Kalman Filter

Here are the required imports for implementing a one dimensional Kalman Filter

from matplotlib import pyplot as plt

import numpy as np

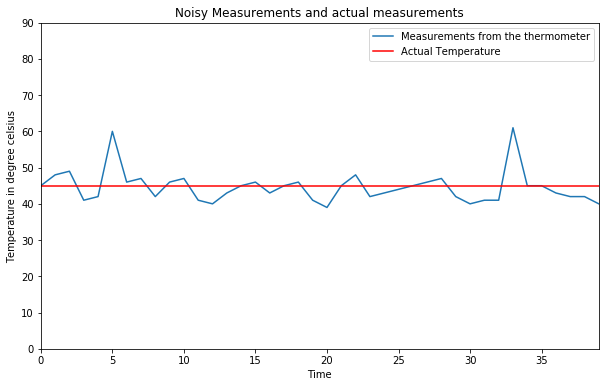

Let us write the code for the one-dimensional Kalman filter first. Consider the case in which we measure the temperature with an erroneous thermometer.

The following are the measurements taken from the erroneous thermometer at different times. We also assume that we know the actual temperature to be 45 degrees.

measurement = [45,48,49,41,42,60,46,47,42,46,47,41,40,43,45,46,43,45,46,41,39,45,48,42,43,44,45,46,47,42,40,41,41,61,45,45,43,42,42,40]

actual = 45

Let us plot these measurements using matplotlib

plt.title('Noisy Measurements and actual measurements')

plt.ylabel('Temperature in degree celsius')

plt.xlabel('Time')

plt.plot(measurement,label = 'Measurements from the thermometer')

plt.axhline(y=actual, color='r', linestyle='-',label = 'Actual Temperature')

plt.axis([0,len(measurement)-1,0,90])

plt.legend()

plt.show()

Now let’s run the code for the Kalman Filtering process.

initial_estimate = 60 # actually this can be anything

initial_error_estimate = 2

error_in_measurement = 4

current_estimate = initial_estimate

current_error_estimate = initial_error_estimate

kalman_output_list = []

kalman_output_list.append(initial_estimate)

for i in range(len(measurement)):

    ####################################

    # Step 1 , calculate the Kalman Gain.

    kalman_gain = (current_error_estimate)/(current_error_estimate + error_in_measurement)

    ####################################

    # Step 2 , calculate the estimate at time 't' from the measurement and estimate at time 't-1'

    kalman_output = (current_estimate + (kalman_gain)*(measurement[i] - current_estimate))

    kalman_output_list.append(kalman_output)

    ####################################

    # Step 3 , calculate the error estimate at time 't'

    new_error_estimate = (1-kalman_gain)*(current_error_estimate)

    ##############################################

    # make the previous variables the current variables for the next iteration

    current_estimate = kalman_output

    current_error_estimate = new_error_estimate

    ##############################################
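To see the three steps at work on concrete numbers, here is the same recursion wrapped in a small function and run on the first three thermometer readings. The function name kalman_1d is introduced only for this illustration; the starting values are the ones used in the loop above.

```python
def kalman_1d(measurements, estimate, error_estimate, error_in_measurement):
    """Return the estimate after each measurement for a static one-dimensional model."""
    outputs = []
    for z in measurements:
        # Step 1: Kalman gain
        gain = error_estimate / (error_estimate + error_in_measurement)
        # Step 2: blend the previous estimate with the new measurement
        estimate = estimate + gain * (z - estimate)
        # Step 3: shrink the error estimate
        error_estimate = (1 - gain) * error_estimate
        outputs.append(estimate)
    return outputs

outputs = kalman_1d([45, 48, 49], estimate=60, error_estimate=2, error_in_measurement=4)
print(outputs)  # approximately [55.0, 53.25, 52.4]
```

With an initial error estimate of 2 and a measurement error of 4, the first gain is 2 / (2 + 4) = 1/3, so the estimate moves one third of the way from 60 towards the first reading of 45, giving 55. The gain then drops to 0.25 and 0.2, so each later reading pulls the estimate less strongly.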

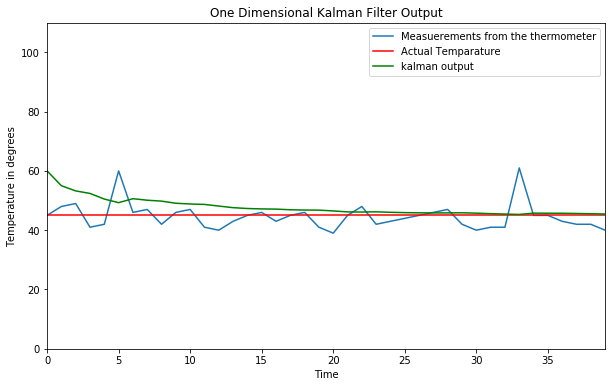

Now we have the Kalman filter's output with us.

Let's finally plot it and check our results.

plt.title('One Dimensional Kalman Filter Output')

plt.xlabel('Time')

plt.ylabel('Temperature in degrees')

plt.plot(measurement,label = 'Measurements from the thermometer')

plt.axhline(y=actual, color='r', linestyle='-',label = 'Actual Temperature')

plt.plot(kalman_output_list,'-g',label = 'kalman output')

plt.axis([0,len(measurement)-1,0,110])

plt.legend()

plt.show()

Now that we are done with the one-dimensional Kalman filter, let's move on to the multi-dimensional case.

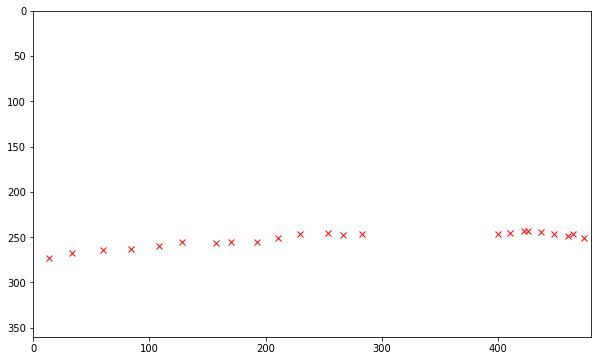

The following code demonstrates tracking a ball rolling across the scene with the help of the Kalman filter algorithm.

Here are the required imports

import matplotlib.pyplot as plt

import cv2

import numpy as np

Note: Please download the video from this link, since we will be using it for our code, and ensure that it is in the same directory as this notebook.

The video containing the tracking scenario is singleball.mov. This file is opened using OpenCV’s VideoCapture class.

A matplotlib figure is initialized. Within the following loop for each frame the location of the detected object will be plotted into this figure:

file = 'singleball.mov' # the video described above, expected in the notebook's directory

cap = cv2.VideoCapture(file)

# assumption: the number of frames is taken from the frame count reported by the video

numframes = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

plt.figure()

plt.axis([0,cap.get(cv2.CAP_PROP_FRAME_WIDTH),cap.get(cv2.CAP_PROP_FRAME_HEIGHT),0])

count = 0 # for counting the number of frames

bgs = cv2.createBackgroundSubtractorMOG2()

# Let us make an array for storing the values of (x,y) co-ordinates of the ball

# If the ball is not visible in the frame then keep that row as [-1.0,-1.0]

# Thus lets initialize the array with rows of [-1.0 , -1.0]

measuredTrack=np.zeros((numframes,2))-1

cv2.namedWindow("Video",cv2.WINDOW_NORMAL)

cv2.namedWindow("Foreground",cv2.WINDOW_NORMAL)

while count<(numframes):

    count+=1

    ret,img2 = cap.read()

    if not ret: # stop if a frame could not be read

        break

    cv2.imshow("Video",img2)

    foremat=bgs.apply(img2,learningRate = 0.01)

    cv2.waitKey(20)

    ret,thresh = cv2.threshold(foremat,220,255,0)

    # [-2:] keeps (contours, hierarchy) under both the OpenCV 3 and OpenCV 4 return conventions

    contours, hierarchy = cv2.findContours(thresh,cv2.RETR_TREE,cv2.CHAIN_APPROX_SIMPLE)[-2:]

    #print(len(contours)) ## This prints the number of contours (foreground objects detected)

    if len(contours) > 0:

        for i in range(len(contours)):

            area = cv2.contourArea(contours[i]) ## Calculates the Area of contours

            if area > 100: ## We check this because the area of the ball is bigger than 100 and we want to plot that only

                m= np.mean(contours[i],axis=0) ### mean is taken for finding the centre of the contour (ball in this case)

                measuredTrack[count-1,:]=m[0]

                plt.plot(m[0,0],m[0,1],'xr')

    cv2.imshow('Foreground',foremat)

    cv2.waitKey(80)

cap.release()

cv2.destroyAllWindows()

print(measuredTrack)

### save the trajectory of the ball in a numpy file , so that it can be used

### later to be passed as an input to the Kalman Filter process.

np.save("ballTrajectory", measuredTrack)

plt.axis((0,480,360,0))

plt.show()

The loop above iterates over all frames of the video sequence.

Each frame is shown in the OpenCV window Video. Then the apply() method of the background subtractor instance is called; this method returns a foreground mask for the current frame.

By applying a threshold to the foreground mask it is converted into a binary image, containing 255 at all pixels which belong to the foreground and 0 at all pixels belonging to the background. An area of connected foreground pixels is a foreground object.

The contours of foreground objects can be determined by applying OpenCV’s findContours() function. In general, there can be more than one foreground object, and hence more than one contour, in a frame.

Explanation of the above code:

1) The centroid of the contour is assumed to be the location of the foreground object.

2) This location is tracked for each frame and plotted into the matplotlib figure.

3) Moreover, for each frame the location of the tracked object is stored in the numpy array measuredTrack.

4) Finally, the numpy array measuredTrack is saved to a file. The contents of this file (i.e. the measured track) constitute the input for the Kalman filter. The Kalman filter is implemented in another Python module (see Kalman Filter) and provides a more accurate track of the moving object.

The track measured above shall be refined by Kalman filtering. Even though a Kalman filter is implemented in OpenCV, we use the Kalman filter module pykalman due to its better documentation.

So let's install pykalman first (it is available from PyPI, e.g. via pip install pykalman).

Import the “KalmanFilter” class from this amazing module

from pykalman import KalmanFilter

Load the saved “ballTrajectory.npy” file

Measured = np.load("ballTrajectory.npy")

Remove the first part of the Measured array, which corresponds to the start of the video when the ball was not yet in view.

If you look closely, the ball enters the scene some time after the video starts.

So we need to remove all the rows of [-1.0, -1.0] until the first measurement is recorded (the time when the ball enters the video).

while True:

    if Measured[0,0]==-1.:

        Measured=np.delete(Measured,0,0)

    else:

        break

numMeas=Measured.shape[0]

However, as you can see, some parts of this array still contain [-1.0, -1.0] rows.

These indicate the parts of the video where the ball was inside the box, and also the parts after the ball has gone completely out of view.

Using NumPy masked arrays, these positions without a measurement can be specially marked, and the Kalman filter module is then able to interpret them as missing measurements.

Thus, at these positions the Kalman filter algorithm can predict the position of the ball even when it is behind the box or completely out of the video.

MarkedMeasure=np.ma.masked_less(Measured,0)
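A tiny example shows what the masking does. The three-row track below is made up for illustration; only np.ma.masked_less() comes from the code above.

```python
import numpy as np

# hypothetical track: the middle frame has no detection
track = np.array([[120.0, 80.0],
                  [-1.0, -1.0],
                  [130.0, 84.0]])
marked = np.ma.masked_less(track, 0)

# rows in which any coordinate is masked are the missing measurements
missing_rows = np.ma.getmaskarray(marked).any(axis=1)
print(marked)
print(missing_rows)
```

The masked row keeps its place in the array, so the frame index is preserved, but its values are flagged as missing rather than being treated as the position (-1, -1).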

In this demonstration the state is modeled as a vector, containing the variables

x-coordinate of current position: x

y-coordinate of current position: y

current speed in x-direction: vx

current speed in y-direction: vy

The measured parameters are x and y. Thus the transition matrix (process model) and the observation matrix (measurement model) are:

Transition_Matrix=[[1,0,1,0],[0,1,0,1],[0,0,1,0],[0,0,0,1]] # A matrix

Observation_Matrix=[[1,0,0,0],[0,1,0,0]] # H matrix
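To see what these two matrices encode, the sketch below applies them once to a made-up state vector. The numbers in state are hypothetical; the matrices are the ones defined above.

```python
import numpy as np

A = np.array([[1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0],
              [0, 0, 0, 1]], dtype=float)   # transition matrix
H = np.array([[1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)   # observation matrix

state = np.array([100.0, 50.0, 3.0, -2.0])  # hypothetical x, y, vx, vy
next_state = A @ state                       # position advances by one frame of velocity
observed = H @ next_state                    # only x and y are measured
print(next_state, observed)
```

One step of the transition matrix adds the velocity to the position and leaves the velocity unchanged (a constant-velocity model with a time step of one frame), while the observation matrix simply picks out x and y.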

Besides these two models the Kalman Filter requires:

1) an initial state, defined by xinit, yinit, vxinit, vyinit

2) an initial state covariance initcovariance, which describes the certainty of the initial state

3) a transition covariance, which describes the certainty of the process model

4) an observation covariance, which describes the certainty of the measurement model

xinit=MarkedMeasure[0,0] ## First Measurement of x-coord

yinit=MarkedMeasure[0,1] ## First Measurement of y-coord

vxinit=MarkedMeasure[1,0]-MarkedMeasure[0,0] ## as v = (d_1 - d_0)/(time)

vyinit=MarkedMeasure[1,1]-MarkedMeasure[0,1]

initstate=[xinit,yinit,vxinit,vyinit]

initcovariance=1.0e-3*np.eye(4)

transistionCov=1.0e-4*np.eye(4)

observationCov=1.0e-1*np.eye(2)

kf=KalmanFilter(transition_matrices=Transition_Matrix,

observation_matrices =Observation_Matrix,

initial_state_mean=initstate,

initial_state_covariance=initcovariance,

transition_covariance=transistionCov,

observation_covariance=observationCov)

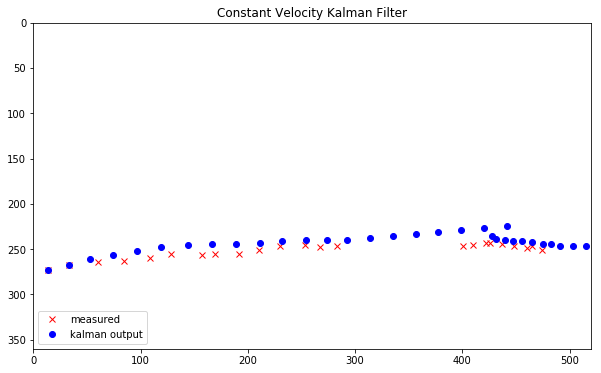

By calling the filter() method of the KalmanFilter object, the track (filtered_state_means) and its certainty in the form of filtered_state_covariances are computed.

The resulting track is plotted to a matplotlib figure, which is shown below:

(filtered_state_means, filtered_state_covariances) = kf.filter(MarkedMeasure)

plt.plot(MarkedMeasure[:,0],MarkedMeasure[:,1],'xr',label='measured')

plt.axis([0,520,360,0])

plt.plot(filtered_state_means[:,0],filtered_state_means[:,1],'ob',label='kalman output')

plt.legend(loc=3)

plt.title("Constant Velocity Kalman Filter")

plt.show()

Kalman Filtering based Cam-shift Object tracking

keep_processing = True;

camera_to_use = 0; # 0 if you have one camera, 1 or > 1 otherwise

selection_in_progress = False; # support interactive region selection

# select a region using the mouse

boxes = [];

current_mouse_position = np.ones(2, dtype=np.int32);

def on_mouse(event, x, y, flags, params):

    global boxes;

    global selection_in_progress;

    current_mouse_position[0] = x;

    current_mouse_position[1] = y;

    if event == cv2.EVENT_LBUTTONDOWN:

        boxes = [];

        # print 'Start Mouse Position: '+str(x)+', '+str(y)

        sbox = [x, y];

        selection_in_progress = True;

        boxes.append(sbox);

    elif event == cv2.EVENT_LBUTTONUP:

        # print 'End Mouse Position: '+str(x)+', '+str(y)

        ebox = [x, y];

        selection_in_progress = False;

        boxes.append(ebox);

# return the centre of four corner points as a float32 vector

def center(points):

    x = (points[0][0] + points[1][0] + points[2][0] + points[3][0]) / 4.0

    y = (points[0][1] + points[1][1] + points[2][1] + points[3][1]) / 4.0

    return np.array([np.float32(x), np.float32(y)], np.float32)

# this function is called as a call-back everytime the trackbar is moved

# (here we just do nothing)

def nothing(x):

    pass

windowName = "Kalman Object Tracking" # window name

windowName2 = "Hue histogram back projection" # window name

windowNameSelection = "initial selected region"

OK, enough of the user interface handling.

Let’s get to the main Kalman filtering and CamShift part of the code.

First, let us initialize all the matrices of our Kalman filter model.

kalman = cv2.KalmanFilter(4,2)

kalman.measurementMatrix = np.array([[1,0,0,0],

[0,1,0,0]],np.float32)

kalman.transitionMatrix = np.array([[1,0,1,0],

[0,1,0,1],

[0,0,1,0],

[0,0,0,1]],np.float32)

kalman.processNoiseCov = np.array([[1,0,0,0],

[0,1,0,0],

[0,0,1,0],

[0,0,0,1]],np.float32) * 0.03

measurement = np.zeros((2,1), np.float32)

prediction = np.zeros((2,1), np.float32)

# if command line arguments are provided try to read video_name

# otherwise default to capture from the webcam of your laptop

import sys

import math

cap = cv2.VideoCapture() # capture object; it is opened on a video file or a camera below

if (((len(sys.argv) == 2) and (cap.open(str(sys.argv[1]))))

    or (cap.open(camera_to_use))):

    # create window by name (note flags for resizable or not)

    cv2.namedWindow(windowName, cv2.WINDOW_NORMAL);

    cv2.namedWindow(windowName2, cv2.WINDOW_NORMAL);

    cv2.namedWindow(windowNameSelection, cv2.WINDOW_NORMAL);

    # set sliders for HSV selection thresholds

    s_lower = 60;

    cv2.createTrackbar("s lower", windowName2, s_lower, 255, nothing)

    s_upper = 255;

    cv2.createTrackbar("s upper", windowName2, s_upper, 255, nothing)

    v_lower = 32;

    cv2.createTrackbar("v lower", windowName2, v_lower, 255, nothing)

    v_upper = 255;

    cv2.createTrackbar("v upper", windowName2, v_upper, 255, nothing)

    # set a mouse callback

    cv2.setMouseCallback(windowName, on_mouse, 0)

    cropped = False

    # Setup the termination criteria for search, either 10 iteration or

    # move by at least 1 pixel pos. difference

    term_crit = ( cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 10, 1 )

    while (keep_processing):

        # if video file successfully opens then read frame from video

        if (cap.isOpened()):

            ret, frame = cap.read();

        # start a timer (to see how long processing and display takes)

        start_t = cv2.getTickCount();

        # get parameters from track bars

        s_lower = cv2.getTrackbarPos("s lower", windowName2);

        s_upper = cv2.getTrackbarPos("s upper", windowName2);

        v_lower = cv2.getTrackbarPos("v lower", windowName2);

        v_upper = cv2.getTrackbarPos("v upper", windowName2);

        # select region using the mouse and display it

        if (len(boxes) > 1) :

            # this crop variable is our image of the selected region

            crop = frame[boxes[0][1]:boxes[1][1],boxes[0][0]:boxes[1][0]].copy()

            h, w, c = crop.shape; # size of template

            if (h > 0) and (w > 0):

                cropped = True;

                # convert region to HSV

                hsv_crop = cv2.cvtColor(crop, cv2.COLOR_BGR2HSV);

                # select all Hue (0-> 180) and Sat. values but eliminate values with very low

                # saturation or value (due to lack of useful colour information)

                mask = cv2.inRange(hsv_crop, np.array((0., float(s_lower),float(v_lower))), np.array((180.,float(s_upper),float(v_upper))));

                # mask = cv2.inRange(hsv_crop, np.array((0., 60.,32.)), np.array((180.,255.,255.)));

                # construct a histogram of hue and saturation values and normalize it

                crop_hist = cv2.calcHist([hsv_crop],[0, 1],mask,[180, 255],[0,180, 0, 255])

                cv2.normalize(crop_hist,crop_hist,0,255,cv2.NORM_MINMAX)

                # set initial position of object

                track_window = (boxes[0][0],boxes[0][1],boxes[1][0] - boxes[0][0],boxes[1][1] - boxes[0][1]);

                #### track_window has (ix,iy,w,h)

                cv2.imshow(windowNameSelection,crop);

            # reset list of boxes

            boxes = [];

        # interactive display of selection box

        # Draw a red boundary (BGR colour (0,0,255)) around the region being selected.

        if (selection_in_progress):

            top_left = (boxes[0][0], boxes[0][1]);

            bottom_right = (current_mouse_position[0], current_mouse_position[1]);

            cv2.rectangle(frame,top_left, bottom_right, (0,0,255), 2);

        # if we have a selected region

        if (cropped):

            # convert the entire incoming image to HSV.

            img_hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV);

            # back projection of histogram based on Hue and Saturation only

            img_bproject = cv2.calcBackProject([img_hsv],[0,1],crop_hist,[0,180,0,255],1);

            cv2.imshow(windowName2,img_bproject);

            # apply camshift to predict new location (observation)

            # basic HSV histogram comparison with adaptive window size

            # see : http://docs.opencv.org/3.1.0/db/df8/tutorial_py_meanshift.html

            ret, track_window = cv2.CamShift(img_bproject, track_window, term_crit);

            # draw observation on image

            x,y,w,h = track_window;

            frame = cv2.rectangle(frame, (x,y), (x+w,y+h), (255,0,0),2);

            # The main observation is drawn in blue.

            # extract centre of this observation as points

            pts = cv2.boxPoints(ret)

            # integer corner points (np.int0 is no longer available in recent NumPy releases)

            pts = np.int32(pts)

            # (cx, cy), radius = cv2.minEnclosingCircle(pts)

            # use to correct kalman filter

            kalman.correct(center(pts));

            # get new kalman filter prediction

            prediction = kalman.predict();

            # draw prediction on image (in green); cv2.rectangle needs integer coordinates

            px, py = float(prediction[0, 0]), float(prediction[1, 0])

            frame = cv2.rectangle(frame, (int(px-(0.5*w)),int(py-(0.5*h))), (int(px+(0.5*w)),int(py+(0.5*h))), (0,255,0),2);

        else:

            # before we have cropped anything show the mask we are using

            # for the S and V components of the HSV image

            img_hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV);

            # select all Hue values (0-> 180) but eliminate values with very low

            # saturation or value (due to lack of useful colour information)

            mask = cv2.inRange(img_hsv, np.array((0., float(s_lower),float(v_lower))), np.array((180.,float(s_upper),float(v_upper))));

            cv2.imshow(windowName2,mask);

        # display image

        cv2.imshow(windowName,frame);

        # stop the timer and convert to ms. (to see how long processing and display takes)

        stop_t = ((cv2.getTickCount() - start_t)/cv2.getTickFrequency()) * 1000;

        # start the event loop - essential

        # cv2.waitKey() is a keyboard binding function (argument is the time in milliseconds).

        # It waits for specified milliseconds for any keyboard event.

        # If you press any key in that time, the program continues.

        # If 0 is passed, it waits indefinitely for a key stroke.

        # (bitwise and with 0xFF to extract least significant byte of multi-byte response)

        # here we use a wait time in ms. that takes account of processing time already used in the loop

        # wait 40ms or less depending on processing time taken (i.e. 1000ms / 25 fps = 40 ms)

        key = cv2.waitKey(max(2, 40 - int(math.ceil(stop_t)))) & 0xFF;

        # It can also be set to detect specific key strokes by recording which key is pressed

        # e.g. if user presses Esc (key code 27) then exit

        if (key == 27):

            keep_processing = False;

    # close all windows

    cv2.destroyAllWindows()

    cap.release()

else:

    print("No video file specified or camera connected.");

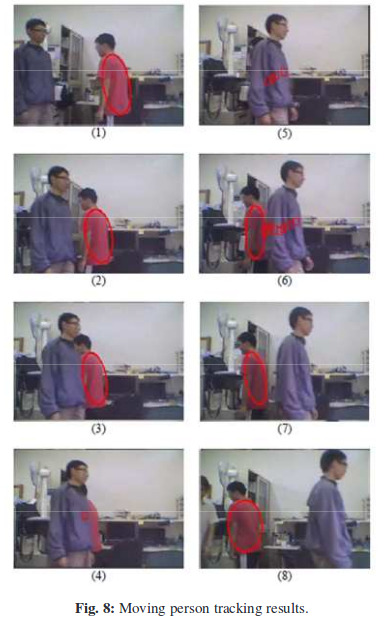

We would also like you to go through this amazing paper here, which uses Kalman filtering based CamShift object tracking.

The Kalman filter can provide estimated positions continuously.

The estimated position then serves as the initial position for CamShift when the target appears again.

In this case, the target is occluded for 8 frames, and the Kalman filter provides the possible positions.

The square marks in (4) - (6) are the positions estimated by the Kalman filter.

When the target appears again, CamShift catches the target immediately based on the estimated positions.
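The idea of bridging an occlusion with predictions can be sketched with a hand-written constant-velocity Kalman filter in NumPy. This is a simplified illustration under stated assumptions, not the method of the paper: the observations are synthetic and noise-free, the occluded frames (6 to 13) are chosen arbitrarily, and the names predict, correct, Q and Rm are introduced only for this example.

```python
import numpy as np

A = np.array([[1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0],
              [0, 0, 0, 1]], dtype=float)    # constant-velocity transition
H = np.array([[1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)    # only x and y are observed
Q = 1e-4 * np.eye(4)                          # assumed process noise
Rm = 1e-1 * np.eye(2)                         # assumed measurement noise

def predict(x, P):
    return A @ x, A @ P @ A.T + Q

def correct(x, P, z):
    S = H @ P @ H.T + Rm
    K = P @ H.T @ np.linalg.inv(S)            # Kalman gain
    return x + K @ (z - H @ x), (np.eye(4) - K @ H) @ P

x = np.array([0.0, 0.0, 2.0, 1.0])            # start at the origin, moving by (2, 1) per frame
P = 1e-3 * np.eye(4)
track = []
for t in range(1, 21):
    x, P = predict(x, P)
    occluded = 6 <= t <= 13                   # 8 frames without an observation
    if not occluded:
        z = np.array([2.0 * t, 1.0 * t])      # synthetic stand-in for the CamShift centre
        x, P = correct(x, P, z)
    track.append(x[:2].copy())
track = np.array(track)
print(track[12])                              # estimate at the last occluded frame
```

During the occluded frames only the predict step runs, so the estimate keeps moving with the last known velocity. In a tracker, that estimate could be used to place the CamShift search window when the target reappears.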
